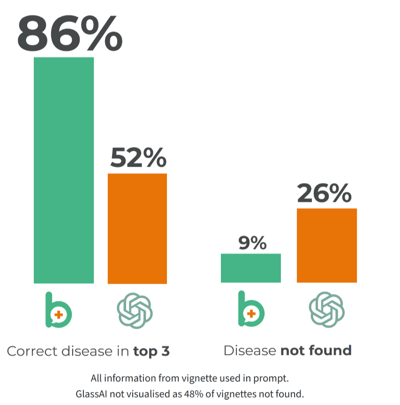

How smart is Bingli in comparison with ChatGPT / Glass AI?

Introduction

The use of generative artificial intelligence (AI), specifically large language models (LLMs), has the potential to transform healthcare. Language modelling has revolutionized natural language processing by enabling computers to understand and generate human-like text, and LLMs have emerged as particularly powerful tools in the field of AI.

What?

Evaluation of the accuracy and reproducibility of Bingli’s AI models, and comparison of their performance with LLMs (ChatGPT and Glass AI), using vignettes to represent virtual patients.

Why?

The European Medical Device Regulation (MDR) imposes strict criteria on validating the accuracy and reproducibility of software that is considered a decision support system.

How?

572 vignettes

Accuracy and reproducibility were tested with the 572 virtual vignettes used by Bingli. These vignettes were transformed into prompts compatible with ChatGPT (via API integration) and with Glass AI (through its dedicated application).

Example vignette

Target disease: Angina Pectoris

Age: 50

Gender: M

Main symptom: Chest pain on exertion

Additional symptom: Tachypnea
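Each vignette was transformed into a prompt, but the exact prompt wording is not given here. The following is a minimal sketch that assumes a hypothetical `Vignette` record and a hypothetical `PROMPT_TEMPLATE`. It shows one way the example above could be rendered for a general-purpose LLM while the target disease stays hidden from the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vignette:
    """One virtual patient, mirroring the fields of the example vignette (hypothetical record)."""
    target_disease: str  # ground truth used for scoring; never shown to the model
    age: int
    gender: str
    main_symptom: str
    additional_symptoms: tuple[str, ...] = ()


# Hypothetical template: the wording actually used in the study is not known.
PROMPT_TEMPLATE = (
    "Patient: {age}-year-old {sex}.\n"
    "Main symptom: {main}.\n"
    "Additional symptoms: {extra}.\n"
    "List the 10 most likely differential diagnoses, most likely first, one per line."
)


def to_prompt(v: Vignette) -> str:
    """Render a vignette as a prompt, deliberately leaving out the target disease."""
    sex = {"M": "male", "F": "female"}.get(v.gender, v.gender)
    extra = ", ".join(v.additional_symptoms) or "none"
    return PROMPT_TEMPLATE.format(age=v.age, sex=sex, main=v.main_symptom, extra=extra)


example = Vignette(
    target_disease="Angina Pectoris",
    age=50,
    gender="M",
    main_symptom="Chest pain on exertion",
    additional_symptoms=("Tachypnea",),
)
print(to_prompt(example))
```

The record keeps `target_disease` but the prompt omits it. This lets the same object be used later to score the model's answer.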

Accuracy

Tested by the rank of the target disease within a ranked list of 10 differential diagnoses. This assesses each model's proficiency in correctly identifying and prioritizing the specific condition in question.
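The ranking step can be sketched as follows. The sketch assumes each model returns an ordered list of diagnosis names, and it matches names by exact, case-insensitive string comparison. The top-k summary is an illustrative choice and may not be how the results were actually reported. `target_rank` and `top_k_accuracy` are hypothetical helper names.

```python
from typing import Optional, Sequence


def target_rank(differential: Sequence[str], target: str) -> Optional[int]:
    """Return the 1-based rank of `target` among the first 10 diagnoses, or None if absent."""
    wanted = target.strip().casefold()
    for position, diagnosis in enumerate(differential[:10], start=1):
        if diagnosis.strip().casefold() == wanted:
            return position
    return None


def top_k_accuracy(ranks: Sequence[Optional[int]], k: int) -> float:
    """Fraction of vignettes whose target disease was ranked within the top k."""
    hits = sum(1 for r in ranks if r is not None and r <= k)
    return hits / len(ranks)


# Illustrative (made-up) differentials for three vignettes targeting angina pectoris.
ranks = [
    target_rank(["Angina Pectoris", "Myocardial infarction"], "angina pectoris"),
    target_rank(["GERD", "Costochondritis", "Angina Pectoris"], "Angina Pectoris"),
    target_rank(["Pneumonia", "Asthma"], "Angina Pectoris"),
]
print(ranks)                     # [1, 3, None]
print(top_k_accuracy(ranks, 1))  # 0.3333333333333333
print(top_k_accuracy(ranks, 3))  # 0.6666666666666666
```

Exact string matching is stricter than a real evaluation could afford. For example, a model may answer "stable angina" for "Angina Pectoris", so a synonym mapping or clinical review would be needed in practice.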

Reproducibility

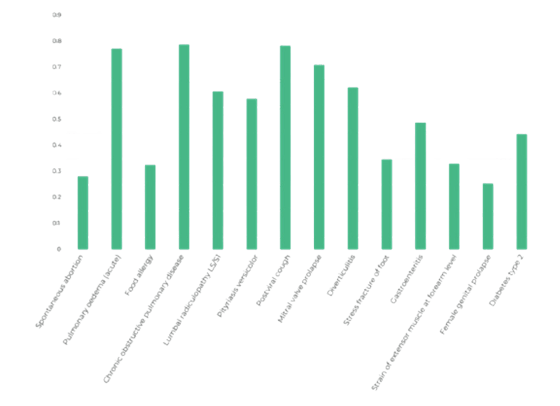

Empirical examination of the level of agreement among responses generated by an LLM when it was presented with the same prompt ten times, for each of 14 distinct cases. Concordance was calculated using Fleiss’ kappa on incomplete blocks.
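For reference, the standard Fleiss' kappa can be sketched as below. The sketch assumes that every case receives the same number of answers (one per repeated run). It also assumes each answer is reduced to a single label, for instance the top-ranked diagnosis. The incomplete-block variant used in the study is not reproduced here, and `fleiss_kappa` is a hypothetical helper name.

```python
from collections import Counter
from typing import Hashable, Sequence


def fleiss_kappa(ratings: Sequence[Sequence[Hashable]]) -> float:
    """Standard (complete-design) Fleiss' kappa.

    ratings[i] holds the n labels given to case i, e.g. the answers from n
    repeated runs of the same prompt. Every case must have the same n >= 2.
    """
    n = len(ratings[0])
    if n < 2 or any(len(row) != n for row in ratings):
        raise ValueError("each case needs the same number (>= 2) of ratings")
    N = len(ratings)
    counts = [Counter(row) for row in ratings]  # n_ij: label counts per case
    categories = set().union(*counts)

    # Observed agreement per case: P_i = (sum_j n_ij^2 - n) / (n (n - 1))
    p_i = [(sum(c * c for c in cnt.values()) - n) / (n * (n - 1)) for cnt in counts]
    p_bar = sum(p_i) / N

    # Chance agreement: P_e = sum_j p_j^2, where p_j is the overall share of label j
    p_e = sum((sum(cnt[cat] for cnt in counts) / (N * n)) ** 2 for cat in categories)

    if p_e == 1.0:
        raise ValueError("kappa is undefined when every label is identical")
    return (p_bar - p_e) / (1 - p_e)


identical = [["A"] * 4, ["B"] * 4, ["C"] * 4]
mixed = [["A", "A", "A", "B"], ["B", "B", "A", "A"], ["C", "C", "C", "C"]]
print(fleiss_kappa(identical))         # 1.0
print(round(fleiss_kappa(mixed), 4))   # 0.4043
```

If every run returns the same answer for each case, kappa is 1, provided the cases do not all share one label. This is the behavior expected from a system whose output is fully determined by its input.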

When the models were tested on finding a target disease from simulated patient vignettes (prompts), the specialized diagnostic AI platform Bingli was more accurate than ChatGPT and Glass AI in different test situations. Bingli always provides perfectly reproducible results (the same input always produces the same output).

Because Europe’s MDR imposes strict criteria on validating software accuracy and reproducibility, the inability of LLMs to exactly reproduce their output is a specific concern in the healthcare context. In our reproducibility test, ChatGPT delivered only a moderate level of agreement (Fleiss’ kappa = 0.52).
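The label "moderate" for a kappa of 0.52 matches the widely used Landis and Koch (1977) bands. Assuming that is the scale intended here, the mapping can be sketched with a hypothetical helper:

```python
def landis_koch_label(kappa: float) -> str:
    """Map a kappa value to its verbal band on the Landis and Koch (1977) scale."""
    if kappa < 0:
        return "poor"
    # Inclusive upper bound of each band, in increasing order.
    bands = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


print(landis_koch_label(0.52))  # moderate
print(landis_koch_label(1.0))   # almost perfect
```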